Qoria’s Michael Hyndman on advancing cybersecurity in children's digital spaces

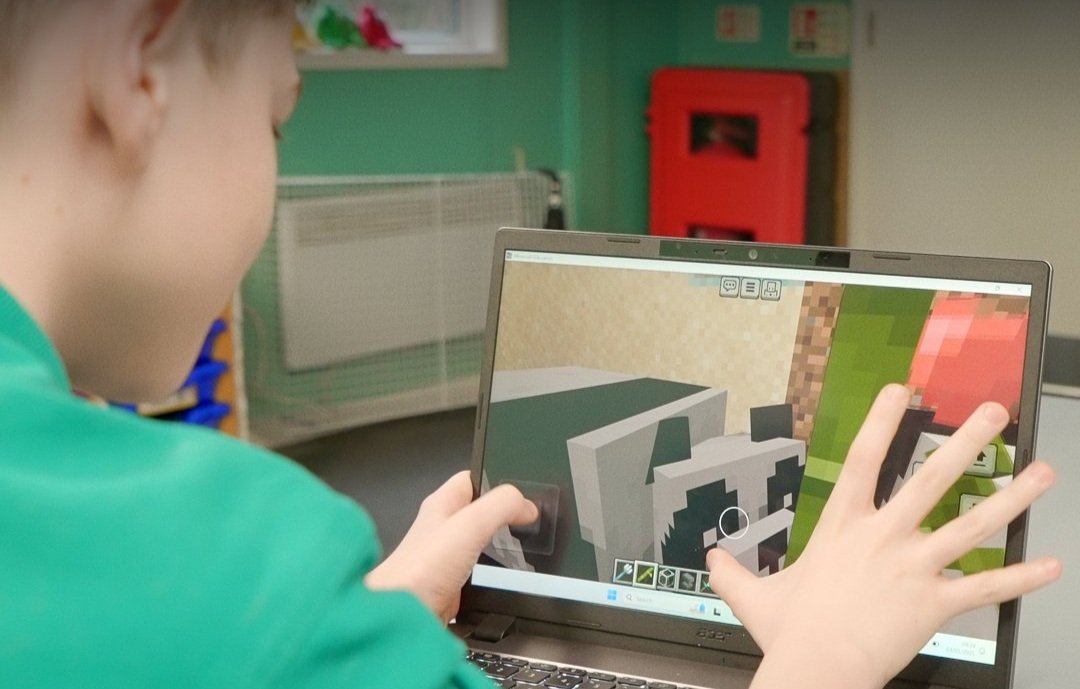

ETIH chats with Michael Hyndman, the newly appointed Chief Information Security Officer at Qoria, to discuss his crucial role in safeguarding children's digital experiences.

With an extensive background as an ethical hacker and a profound commitment to cybersecurity, Michael is driving Qoria's mission to protect young users globally.

In this exclusive interview, he shares insights into the unique challenges of the edtech sector, the evolving role of AI in cybersecurity, and his strategies for ensuring robust data protection and privacy.

ETIH: Could you describe the core mission of Qoria and how your role as CISO contributes to achieving this?

M.H.: Qoria’s mission is to see every child thrive in their digital lives. It might sound a bit cliché, but the reality is that every day we have an immeasurable impact on the lives of children around the world through tools and services that protect them from significant harm to their life or health. To any parent who has to deal with the impact of such awful circumstances, our mission is anything but cliché.

As Qoria’s CISO, my role is pivotal in ensuring that we deliver on that mission by constantly improving the security of our products and business, taking a proactive approach to technology risk management, ensuring that our customers maintain agency and control over their data, and complying with a variety of security and privacy standards. Ultimately, all of these elements work together to protect our ability to safeguard children and students.

ETIH: What are some of the unique cybersecurity challenges that companies like Qoria face in the edtech sector?

M.H.: In my view, the biggest security challenge for the edtech sector over the past few years has been the downward pressure on security funding since the post-pandemic tech bubble burst between 2021 and 2023, which has led to staff layoffs and increased pressure on security teams to do more with less.

This is a challenge because the best chance an organisation has at improving its security is by attracting and retaining the right cybersecurity talent. Failing to do so negatively impacts a company's ability to reduce risk effectively. I worry, and have seen anecdotal evidence, that this downward pressure across the industry has actually increased the risk profile of a number of SaaS and edtech companies.

At Qoria, however, I have been immensely thankful to see both my security budget and team count grow during my tenure as Vice President of Information Security.

Another significant challenge for the sector is the role that edtech companies perform when it comes to the custodianship of education and student data. Typically, edtech companies perform the GDPR-designated role of data processor, and while regulations like GDPR have really set the standard for ensuring the rights of data subjects, the tech industry at large still needs to gain a better handle on the practical aspects of data custodianship.

You might recall in 2020, an internal document was leaked from Facebook and published in the media which exposed the fact that Facebook fundamentally had no idea where its user data went and what was being done with it.

This is really just one example of a problem that plagues the tech industry at large, where companies “think” they know what data they hold, but they don’t actually have sufficient governance or technical systems in place to “know” with a high degree of confidence what data they actually store and process. The problem persists in part because it is very complex to solve.

However, the issue of “thinking but not actually knowing” has really come to the fore in the last few years, where large companies have faced serious consequences because they discovered, following a breach, that they were storing data that they were unaware of. Unfortunately, by that stage it was too late: the data they should not have been storing was stolen and up for sale on the dark web.

At Qoria, we’ve taken a very proactive approach to this issue and invested in systems that give us a high degree of visibility and confidence around what data records we hold, so that we can ensure that we are only storing and processing the data that we should be, and in a way that meets customers' security and privacy expectations.

ETIH: How do you see AI evolving in the role of cybersecurity within edtech over the next few years?

M.H.: AI is bringing about a paradigm shift for many areas of education, including pedagogy, student wellbeing and the security of teaching and learning environments.

For the cybersecurity space specifically, AI is allowing people to work faster and smarter. As a CISO, I’m already seeing AI enhance almost every function and role within security, including security engineering, GRC, data privacy, security operations and more.

Perhaps my biggest concern is how AI is accelerating the speed and impact of threats. Having worked as an ethical hacker and bug bounty hunter for several years, I’ve seen this impact firsthand. Recently, I hacked into Google’s internal network and realised just how much generative AI is accelerating real-world threats.

With generative AI, I was able to shorten my exploit development time by at least a week and write more advanced code than I could have previously. Thanks to generative AI, building and delivering exploits is now far more accessible and scalable than it used to be.

ETIH: As a leader in cybersecurity, what major trends do you foresee in edtech that could influence security strategies?

M.H.: Emerging data privacy and AI regulations will influence edtech security strategies in the coming years. The US in particular, where many edtech providers are based, is introducing both state and federal privacy laws, some of which are specific to the edtech industry. Staying across these is important to remain trusted in local markets. As I touched on earlier, complying with these requirements demands more than theoretical application; it requires good leadership, good understanding, and good internal practice.

With AI at the forefront of innovation, edtech companies that handle education or student data have a responsibility to ensure this data is not exploited and that data subjects are not harmed.

The risk of unintentional harm from AI innovations is real, as evidenced by the negative impacts seen from technologies such as social media algorithms. To mitigate this risk, edtech companies should have in place an internal ethics policy and framework, established and owned by the CEO.

Such frameworks provide clear guidelines on the ethical development of new features, including AI, to ensure that innovation takes place without causing unintended harm. Although this is more of a broad safety consideration than a security consideration, many edtech companies will be relying on their security, privacy and risk teams as key stakeholders to ensure that these considerations are built into their strategies.